As experts from across the world gather in Edinburgh, the spotlight is on our expertise in robotics

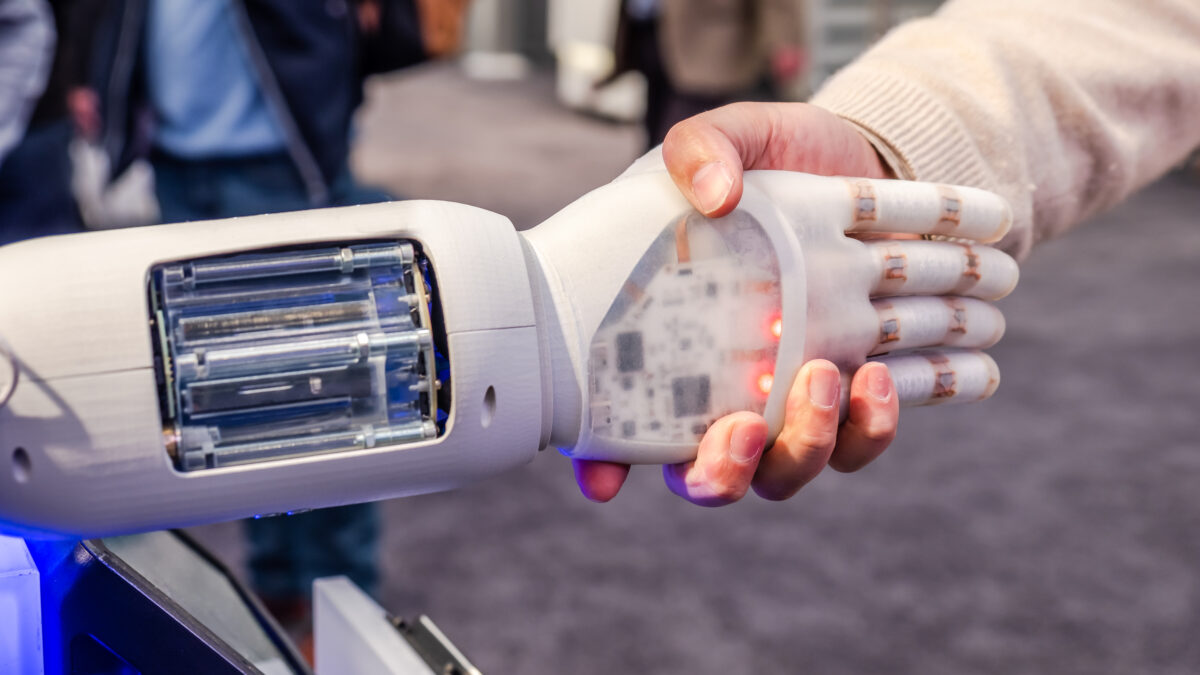

Researchers in Scotland have developed expertise in robotics that positions the country as a leader in the field. Technologies that make robots sensitive to touch, that could allow them to express human-like emotions, and that enable them to recognise their surroundings, are being developed here. The breadth of work being carried out ranges from the automation of GP prescriptions to an academic collaboration exploring the possibility that the distinction between humans and robots may one day disappear.

This week, Heriot-Watt University and the Edinburgh Centre for Robotics, a joint project with the University of Edinburgh, are co-hosting the European Robotics Forum (ERF 2017) at the EICC. The audience at this major European conference includes researchers, engineers, managers, entrepreneurs, and public and private sector investors in robotics research and development. The forum’s objectives are to identify the potential of robotics applications for business, job creation and society; to discuss breakthroughs in applications; to learn about new business opportunities and initiatives; to influence decision makers; and to strengthen collaboration in the robotics community.

At Heriot-Watt, Professor Oliver Lemon and his team are developing technology to enable robots and computers to interact naturally with humans, using combinations of speech, gesture, movement and facial expression. The university’s Interaction Lab is one of the few places in the world to have pioneered the use of machine learning methods to develop conversational language interfaces. This means that machines can now learn how to hold dialogues with people and better understand and generate natural human language. Ultimately, this research will lead to new interfaces and robots that are easier, quicker, and more enjoyable to use. The Interaction Lab has worked with companies including Yahoo, Orange, and BMW to solve problems in conversational interaction with machines.

The lab is breaking new ground in the development of robots that can learn from human language and interact socially with humans. The team was successful in a 2015 European Commission Horizon 2020 funding call, which has resulted in ‘Pepper’, Heriot-Watt’s resident robot. Pepper exhibits behaviour that is “socially appropriate, combining speech-based interaction with non-verbal communication and human-aware navigation”. The project is one of several initiatives indicative of Scotland’s expertise.

A team at Glasgow University has devised an electronic skin for use in robotics, prosthetics and wearable systems that provides tactile and haptic feedback. The technology was conceived and developed by Dr Ravinder Dahiya, a reader in electronic and nanoscale engineering, as part of a project funded by the Engineering and Physical Sciences Research Council (EPSRC). It began life in 2014 as a response to the limitations of the robot technologies then in existence.

“Interfacing the multidisciplinary fields of robotics and nanotechnology, this research on ultra-flexible tactile skin will open up whole new areas within both robotics and nanotechnology,” said Dahiya at the time of the EPSRC award. “So far, robotics research has focused on using dexterous hands, but if the whole body of a robot is covered with skin, it will be able to carry out tasks like lifting an elderly person.

“Today’s robots can’t feel the way we feel. But they need to be able to interact the way we do. As our demographic changes over the next 15-20 years, robots will be needed to help the elderly.” Robots would have skin so that they could feel whether a surface is hard or soft, rough or smooth. They would be able to feel weight, gauge heat and judge the amount of pressure being exerted in holding something or someone.

“In the nanotechnology field, it will be a new paradigm whereby nanoscale structures are used not for nanoscale [small] electronics, but for macroscale [large] bendable electronics systems. This research will also provide a much-needed electronics engineering perspective to the field of flexible electronics.” The research is aligned with wider work on flexible electronics: the creation of bendable displays for computers, tablets, mobile phones and health monitors.

The work has led to a breakthrough in materials science: the ability to produce large sheets of graphene (the ‘wonder’ material that is a single atom thick but is flexible, stronger than steel and capable of efficiently conducting heat and electricity) using a commercially available type of copper that is 100 times cheaper than the specialist type currently required. The resulting graphene also displayed ‘stark’ improvements in performance, making possible artificial limbs capable of providing sensation to their users.

Last year, Dahiya received an award from the Institute of Electrical and Electronics Engineers (IEEE) that honours researchers who have made an outstanding technical contribution to their chosen field, as documented by publications and patents. The award is the latest in a string of recent accolades for Dr Dahiya, which also include the 2016 International Association of Advanced Materials (IAAM) Medal, the 2016 Microelectronic Engineering Young Investigator Award, and inclusion in the 2016 Scottish 40UNDER40 list.

Work is also underway to make robots more human-like in their behaviour. Dr Oli Mival, a principal research fellow at Edinburgh Napier University, is an internationally recognised expert in human-computer interaction, working in education, healthcare, industry and government. At a conference in St Andrews last month, organised by VisitScotland’s business events team, Mival spoke about how user experience design in computing could turn simple interactions with robots into something more human-like.

Much of his work over the past decade has focussed on the study of software in digital assistants and companions. He cited the four that dominate the market: Amazon’s Alexa, “an embodiment in a physical object”, and Microsoft’s Cortana, Apple’s Siri and Google Now, voice-driven assistants on mobile phones. “That’s all great,” he said, “but what about if we move from software to the notion of embodied AI [artificial intelligence]?”

Human evolution has wired us to define what we think of as natural, said Mival, and we are used to the idea of brains being inside bodies. We respond differently to artificial personalities depending on whether they are represented physically or are screen based. A robot’s representation of a human body puts it closer to being a peer: “The challenge then becomes,” said Mival, “how do we make robots less robotic?”

He used the example of the perceived difference in intelligence between cats and dogs. From a neurological and cognitive perspective, a cat is far less advanced than a dog; it lacks the fundamental neurological constructs that a dog has. “However, it has a behaviour that we attribute intelligence to. And this is a construct that humans use also, knowingly or unknowingly. The attribution that we give about people’s intelligence comes from extrapolation based on their behaviour.”

Mival said that this knowledge can be used to make a robot appear more human-like in its behaviour through so-called affective computing, in which a machine can recognise, interpret, process and simulate human emotion. “We can start to have things that respond based on our understanding and the context of the world,” said Mival.

Also at the conference was Dr Philip Anderson of the Scottish Association for Marine Science (SAMS), which has developed techniques to probe the winter-time polar atmosphere, including low-power autonomous remote systems on the earth’s surface and kite, blimp and rocket instrument platforms in the earth’s atmosphere. Over the last 10 years, Anderson has pioneered the use of small robotic aircraft for measuring the structure of the atmosphere near the surface. At SAMS, the techniques aid understanding of sea-ice dynamics in the Arctic and help explain the dramatic reduction in summer-time sea-ice coverage.

Another speaker, Dr Kasim Terzic, a teaching fellow at the University of St Andrews, outlined work being carried out on computer vision: the automatic extraction, analysis and understanding of information from a single image or a sequence of images. Terzic has published extensively on visual scene understanding, a field of research central to the ability of robots to understand and communicate with the world around them.

He said there is a big gap between the complexity of the tasks that robots can perform and their ability to understand the physical context they inhabit: “Why can’t we have robots perceive the world the way we do? Part of it is that humans are so good at understanding visual clues; we are capable of a deep semantic understanding when presented with a scene. Computers are not very good at doing this.”

A huge amount of work has been done over the years on object recognition, he said, more recently supported by the amount of visual data available on the web. Making this process more accurate and less demanding in terms of processing power is an ongoing challenge. But the next step is to progress to a higher level of reasoning, where recognised objects can be placed in context to provide a semantic understanding of what is happening. Recently, Terzic and colleagues succeeded in programming a robot to recognise a favourite toy and follow it, ignoring other toys.

“We are getting good at object recognition,” he said. “It’s not solved, but we are getting to the point where we really need to leverage context. We are not going to solve it by throwing more algorithms at it; there is the potential to apply deep learning [machine learning inspired by the human brain]. And [then] there is [the] transition to a cognitive robot which understands and learns. Our goal is where you can have a service robot that you buy and when you talk to it, and tell it what to do, it will react the same way a human would do.”

We need to talk about Wall-E

According to roboticist Rodney Brooks, the distinction between humans and robots will disappear in the coming decades. Arati Prabhakar, the former head of DARPA, America’s Defense Advanced Research Projects Agency, says the merging of humans and machines is happening now.

If so, are we prepared for such a union? How is the ongoing development and deployment of artificial and social robots bringing change in our lives and societies? The Anthrobotics Cluster at the University of Edinburgh was created last year to consider these questions from an interdisciplinary perspective, encompassing humanities, social sciences, informatics, robotics, genetics, engineering, law, medicine, science and technology studies.

It was initiated by Luis de Miranda, a philosopher and novelist and the author of The Art of Freedom in the era of automata, a cultural history of digital devices and our relationship with computers. He has studied the notion of esprit de corps, looking at how, over the last three centuries, we have conceived of institutions, corporations and organisations as social machines and of humans as robots.

His idea, an echo of philosopher Lewis Mumford’s ‘megamachine’, is that even before digital computers existed, we were ‘anthrobots’, “dynamic and dialectic compounds of humans and protocols”. He said:

“To make progress in the new field of social robotics, instead of looking at humans and robots as separated realms, we should start with their intertwined realities and look at anthrobotic ensembles.”

He has worked with Dr Michael Rovatsos and Dr Subramanian Ramamoorthy, of the University of Edinburgh’s Informatics Forum, to write a position paper published in the book What Social Robots Can and Should Do. Supported by the university’s Institute for Academic Development, de Miranda organised the first anthrobotics interdisciplinary seminar and reading group, in partnership with the social informatics group run by Edinburgh University research fellow Dr Mark Hartswood.

The cluster has explored questions raised by accelerated developments in artificial intelligence and robotics and fostered a dialogue between the humanities, social sciences, computer science and robotics. One of the outcomes has been a new definition of robots as ‘enablers’, drawing on the ambivalence of the notion of enablement in social psychology: something that can help and facilitate, but can also create dependency and even addiction.

Earlier this week, ahead of ERF 2017, a workshop was held at the university with scholars from European universities exploring the new field of anthrobotics, as well as a public round table on the theme “Are we (Becoming) Anthrobots?”, in partnership with robot manufacturer SoftBank and the Eidyn research centre.

“We are just at the beginning of our reflection,” said de Miranda. “Now that people are becoming aware that robotic systems are a part of our everyday lives and even our cultural and social identities, we must develop not so much better artificial intelligence, but rather better anthrobotic intelligence; more harmony, pluralism, and understanding in the way we deal with the digital machines that have merged with our lives.”
